In late March, 2018, I was in Los Angeles presenting at the inaugural Virtual Medicine conference at Cedars-Sinai. Here’s the website and screenshot:

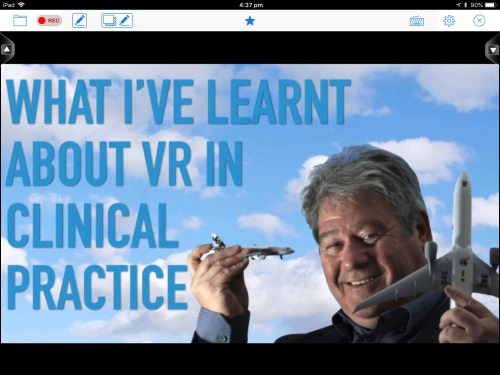

I had been invited over by faculty head Dr. Brennan Spiegel to present, TED-style, on my 17 years working clinically with Virtual Reality.

The timing of the conference was excellent: the day it finished, Steven Spielberg’s new film, Ready Player One, which features VR, was set to open. Indeed, my hotel, the Sofitel, featured a huge poster for it on its wall.

Some 300 people attended in person while another 1000 viewed the live stream via 360-degree Samsung technology, so it could be viewed in a head-mounted display. The stage was equipped with a large vanity screen which mirrored the very large projection screen behind and above the presenters. Below, you can see a picture of the setup which I took during a break. The control booth for the presentations can be seen at the very top of the picture, centre.

For my presentation, I was given 25 minutes very early in the two-day conference, and had been asked beforehand to hand in my slide stack to be uploaded to a central server.

I never do that, given that I present using Apple’s Keynote on a MacBook Pro and my slides contain a number of unusual fonts.

So a number of other speakers and I made arrangements with the technical support staff to use our MacBooks on stage, without standing behind a podium. This required some form of remote control so we could wander the stage.

The videos of all the speakers will be uploaded soon, I’m told, but in the meantime, there are some lessons to be learnt which I want to pass on.

When tech support becomes a hindrance

While most other speakers used a clicker device to advance slides (and unfortunately also used the built-in laser light to highlight live slide elements), I elected to use my iPad both to control the slides and as a mirror for my MacBook. The MacBook was placed in presenter mode, meaning it displayed the current slide the audience could see on the left, and the next build on the right. You can see an image, below:

The next build can be the next action to take place on the same slide, or it can be the next slide. Some presentation mavens speak about the maximum number of slides a presentation should contain. This is really uninformed 1980s thinking. I could have one slide with 200 builds which takes twenty minutes to present, or 200 individual slides with no builds, just transitions between slides. Be wary of anyone who offers you unbending rules about presentations.

If you’re unfamiliar with presenter mode (most presenters I meet, whether using Keynote or PowerPoint, don’t use it), you can see it in action, illustrated above, using the latest Keynote 8. On the left is the image the audience sees, and on the right is the next build the audience will see when the slideshow is advanced. It also shows the number of builds still to come on the same slide: in the image above, the right “preview” screen states Builds Remaining: 3. You can also see two other handy displays: the current time on the left, and the elapsed time on the right. The elapsed timer starts when you hit Keynote’s Play button.

In previous versions of Keynote, builds remaining was represented by blue dots not numbers, displayed under the current screen (as shown in this screen shot of Keynote 5, below). Why don’t more people use presenter mode? Presenting at conferences using the venue equipment almost always prohibits its use.

Note, too, the use of Post-it note graphics placed on the two slides. This was a great feature of Keynote up to version 5. It allowed you to remind yourself of the content of the screen when it was black. It’s like the presenter’s notes Keynote still maintains at the bottom of the screen, which I NEVER use. They take up too much screen real estate, and they encourage you to read the slides. My guidance is that anything written in the presenter’s notes area (hidden from the audience) should really be on the slide itself. The Post-it style note – which is also invisible to the audience – used to help me remember there was a movie or object under the black screen. I wanted to control when the audience would see the movie begin, often with a slow fade-in, rather than have them see its initial image. When Keynote on the desktop aimed for parity with the iOS versions, starting in Keynote 6, these Post-it notes were dropped in favour of yellow Comments notes to encourage collaboration with other Keynote users. Comments do not appear during the live presentation, so they can’t function as slide reminders.

The Post-it notes feature was also useful if objects were to enter the screen from the sides and I wanted a cue to remind me of the blank slide’s content. In the screen movie below, I wanted to illustrate an experiment where objects move across the screen, entering stage left and right, and the subject’s task is to say which one is in front and which one is behind (a test of depth perception). The objects need to move in from off screen, but Keynote 8 can’t show in presenter mode that they are “waiting in the wings” to make their entry. This is where the Post-it note feature could still be handy. To overcome this in Keynote 8, one could put the reminder in the presenter’s notes, but that area is then taken up for the entire show, not just the slide in question. You would have to be at the MacBook to hit the correct keyboard sequence (SHIFT+COMMAND+P) to switch off presenter notes.

But here is the problem with how Keynote operates: on both the current and next build screens, nothing can be seen. Keynote will not show the animation but, just as importantly, it can’t show what’s not on the slide nor what will happen next – you look at two blank slides, see below. The screen movie of presenter mode shows the current time, the elapsed time, the builds remaining (counting down 3, 2, 1) and the red and green progress bar at the top edge of the screen – when it is red, an animation is taking place; when it is green, Keynote is ready for the next manual build.

My own vanity app setup to annotate and control slides during a presentation

I have written previously about the software I use to facilitate my own vanity setup on the iPad, called Doceri, from SP Controls. It uses an app on the MacBook (or a Windows machine) and a complementary app on the iPad. With the two devices sharing the same wifi LAN (I bring my own router; in the US it isn’t connected to the internet, though at home it is), I can also annotate the slides live, something the latest update to Keynote on the iPad also allows if you use the iPad as your presentation hardware.

I should add that I had created the slideshow using Keynote 7, but decided to live dangerously and update to the just released Keynote 8 the day before my presentation. I made a copy of Keynote 7 just in case something broke in 8.

When things don’t go according to plan

I want to point out a couple of issues, known only to me, which raised my level of arousal during my presentation. They may offer you the opportunity to learn from my experience.

My presentation was scheduled to begin very soon after the conference introductory remarks. Earlier, when I arrived at the venue, I had gone to the control booth at the back of the auditorium and met the tech group, who checked my sound and wired me up with a back-of-the-head wireless microphone. Because I teach dance, I’m very used to wearing a similar microphone behind the ear.

While I was waiting for my turn to go on stage, I did two things. First, I quickly ran through all the slides to make sure they were in the correct order and that no build was hiding behind another. That can happen when you’re working on slides with multiple builds which cover other builds, and you forget to build one out to reveal another image or text.

The second thing I did was fire up the portable wifi router, and open the Doceri apps on the Mac and iPad. This was a small problem as the router expected to see the internet, and had to be told not to keep searching for a connection. At home this happens automatically, but in the US it seeks a 4G tower. A moment of doubt, but it soon did its job.

Once I was ready to go, I headed to the first row, waiting for the signal to head to the podium where the MacBook would be connected via my HDMI adaptor to the A/V system. Once it was hooked up, I placed it in presenter mode. A different group of tech assistants was there, and as the conference host was finishing his remarks, with perhaps 20 seconds before I was to take centre stage, I noticed one of the tech crew opening a browser window on my MacBook and accessing YouTube. In doing so, he had dropped me off my Optus router, which Doceri needed, and joined the venue wifi. When I asked why this was happening, I was told it was a final sound check, performed by playing a YouTube movie.

I was stunned.

I always keep a blank first slide with a sound file on it for just such purposes, which then transitions to my opening title slide, as shown below – yes, I used a movie background as a way to say, “this isn’t going to be your usual medical PowerPoint.”

I pointed out to the tech people what had happened and quickly switched the wifi back to my own router with a few seconds to spare, and the Doceri connection was reinstated. But as I moved to the stage for my introduction, I looked down at my iPad, which ought to have been in presenter mode, and it was now in mirror mode. Usually two small screen icons with the numbers 1 and 2 appear above the display, allowing you to begin presenter mode (Screen 1, coloured blue, below) or switch to mirror mode (Screen 2, in grey, below) if you want to annotate the slide – annotations won’t work in presenter mode.

But when I looked closely the choice of modes was not available – I was stuck in mirror mode. A quick glance at the MacBook over on stage right showed it to be in mirror mode, likely a result of the tech support person dropping out of Keynote to open a YouTube browser window.

I was now stranded centre stage, with no hope of taking time to leave the stage and fiddle with the Mac via its screen preferences. I had no way of knowing if the very large audience screen would show the guts of my Keynote (my worst presenter nightmare) or just an empty desktop – a preferred option. But the thought also occurred to me that if I tried to set up presenter mode, the audience could possibly see what I alone should see, the complete set of slides to come, which for me is a presentation faux pas.

So I had to be content with operating in mirror mode, with the iPad acting solely as a slide advancer. It could work in annotation mode too, but I had prepared my talk so this great feature would not be necessary. Things that would need to be highlighted were already pre-prepared with animations. I had rehearsed and rehearsed and so my not having presenter mode was unfortunate, but not a deal breaker.

What was much more serious was what went missing in mirror mode – my timer, which tells me how much time has elapsed (or, if you prefer, how much time remains), plus the actual Keynote clock time. The iPad does display real time but it’s tiny. I was somewhat panicked when I saw that neither the Screen 1 nor the Screen 2 icon was present.

This was very alarming as I need to see the timer. I don’t rehearse my exact words like most do for TED talks; when I rehearse I “play” with the slide narration, testing out various ways to tell my story, knowing the feedback from the audience will guide me. This helps keep the talk spontaneous and lively, not over-rehearsed and “flat”. Some slides I can spend more time with, judging by the audience reactions; some can be glossed over swiftly. But I need the timer to keep me on course and finish early if I can. If things are going well, I might be able to go to black (use the B key) and add some more to the speech as long as it’s on message – I always rehearse these extras for just such occasions.

Presenting at the Cedars-Sinai Virtual Medicine conference – here, holding my iPad Pro to control and annotate slides. There’s a Samsung 360-degree camera live-streaming, bottom left.

Only after I gave my talk did I learn that just in front of the large vanity monitor were three small lights – green, yellow and red – which would eventually flash. These are cue lights to inform presenters of their timing situation. Essentially, I was flying in the dark. When I began my presentation we were already behind schedule and I really wanted to help bring it back, even at the cost of jumping over a slide, but alas I had no idea of the time, and I refuse to look at my wristwatch while presenting.

As if not having presenter mode wasn’t enough…

Once I settled down into a presentation rhythm and felt I had the audience onside, the next glitch occurred. I had quite a few movie clips to show, which I had downloaded from YouTube and converted to .mov format via specialist software. These had all worked on the MacBook in Keynote rehearsal mode, and also when playing on my external LG monitor and Epson projector via HDMI at home. Earlier this year, however, at a conference in Melbourne, two of my movies came up on the screen and refused to play. My initial thought at the time was that I had somehow corrupted the build-in and build-out timings, but when I got home they played fine on my system.

Lo and behold, the same thing happened to three movies that had worked fine in rehearsal. I tried twice to get them working, and when it was clear they would not, I used my storytelling to convey to the audience the idea behind the movies. When these situations happen, you just have to keep going, and appear professional – there is no room for thoughts like “I’ve flown 8000 miles to be here – this shouldn’t happen”.

As it turns out, I received two unexpected compliments during a break after my presentation. One was that I had “read the audience well”, and the other was: “What software did you use?”

I am still working on the movies-not-playing problem, trying to see how their properties compare with those of the movies that did play. This can be done using QuickTime’s Movie Inspector window (COMMAND-I).

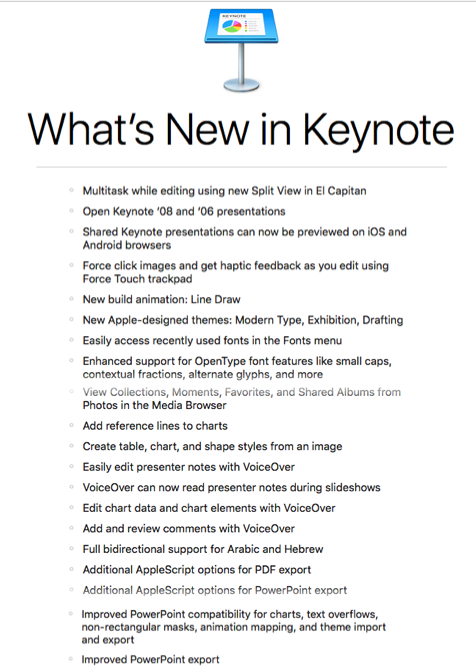
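For anyone with more than a handful of clips, the same comparison could be scripted rather than done by eye. What follows is only a rough sketch, not something I used at the conference: it assumes the free ffprobe tool (part of FFmpeg) is installed, and the function names and the particular properties chosen are my own illustration. It lists any property value that turns up in a failing movie but in none of the working ones.

```python
import json
import subprocess


def probe(path):
    """Return properties of the first video stream, read with ffprobe.

    Assumes ffprobe (part of FFmpeg) is installed and on the PATH.
    """
    result = subprocess.run(
        ["ffprobe", "-v", "error", "-select_streams", "v:0",
         "-show_entries",
         "stream=codec_name,profile,pix_fmt,width,height,avg_frame_rate",
         "-of", "json", path],
        capture_output=True, text=True, check=True,
    )
    streams = json.loads(result.stdout).get("streams", [])
    return streams[0] if streams else {}


def suspicious_properties(working, failing):
    """Collect property values seen in failing clips but in no working clip.

    Both arguments are lists of property dictionaries, one per movie.
    """
    suspects = {}
    for props in failing:
        for key, value in props.items():
            seen_in_working = {w.get(key) for w in working}
            if value not in seen_in_working:
                suspects.setdefault(key, set()).add(value)
    return suspects
```

In use, you would call probe() on each movie file, sort the results into the ones that played and the ones that didn’t, and pass the two lists to suspicious_properties(). A difference it reports is only a lead to follow up, not proof of the cause.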

Extra features in Keynote 8

It’s always good to know Keynote is receiving updates, although I’m not sure the changes justified calling the update version 8, rather than a dot release, 7.4. Perhaps it’s to acknowledge the big change in iWork for iOS, where the Apple Pencil can be used on inexpensive iPads to annotate slides. Donut charts in the mobile and desktop iWork apps, integration with Box for sharing content, and a new image gallery feature are the major additions, although I do miss the “Smart Builds” feature left behind in Keynote 5. See an example below, which I like so much I saved it as a movie and now use it every so often. It’s actually two minutes in length, but in the hope of not triggering YouTube’s copyright algorithm, I’ve shortened it. Its purpose is to highlight the historical importance of the heart when I do presentations about the brain. The importance of the heart remains firm in our folklore, so I combined movie posters with snippets of well-known songs where the lyrics feature “heart”.

For a fuller description of the Keynote 8 features, see KeynotePro’s website Keynote pro and Keynote 8.